Mapify

Motivation

The main idea of the project is to create an interface that presents the information found on any webpage on a map, and provides location-based and time-based search tools so the user can easily locate information related to a particular region. Many web pages contain addresses embedded in their text; common examples include listing sites and classified pages. In most cases, however, the user has to look up each location manually by copying the address text into Google Maps. This is inefficient and time-consuming, and it hinders the discovery part of the user experience. Mapify will act as a decorator for any web link and put the original content on a map interface. It can also be extended as an iOS or Android app. Existing examples of similar concepts include apps like Flipboard (flipboard.com) and Pulse (pulse.me), which take web content and display it in a unique and well-organized manner.

EXAMPLE USE CASE

Alice wants to eat ice cream in Lausanne on a Sunday at 7:00 PM. She searches Google for ice cream in Lausanne and receives many results. She has to click on each link to find out which stores are close to her current location and which are open. Using our website, she will run the same search, but the results will be filtered directly according to her current location and the current time. This way, she can find a relevant place in one step.

Project Milestones

The main milestones of this project are as follows. They are explained in detail in the later sections of this proposal.

1) Given a webpage, extract geo-coordinates of embedded addresses and opening hours

2) Given a Google search query, extract geo-coordinates from the top results and provide filters to navigate through the results

3) Create mobile apps for the service

*For this project, we want to target only the first two milestones.

Main Modules

Following is a list of the main modules of this project. Each of these components will be explained in detail later.

1) Backend web server - for the purpose of this module, the project will make use of Flask, a lightweight web application framework written in Python. We will host it using Apache and will explore the possibility of using NGINX for performance speed-up.

2) Database of addresses and places - the database will contain map information gathered from OpenStreetMap (OSM), a collaborative project that aims to create a free map of the world, available online at www.openstreetmap.org. To convert addresses to coordinates we will use imposm.geocoder, a library that depends on a PostgreSQL/PostGIS database. The OSM data is loaded into that database with the companion import tool, Imposm.

3) Indexing engine - We will use an indexing engine to quickly search for keywords from our data set of street names and places. This will act as a precursor step to geo-coding, to determine likely candidates for addresses and opening hours.

4) Address resolution API

5) Store hours resolution API

6) Frontend - HTML, CSS and JavaScript
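The role of the indexing engine (module 3) can be illustrated with a toy inverted index. This is only a sketch under stated assumptions: the proposal does not name a specific engine, so the functions `tokenize`, `build_index` and `candidates` and the sample street names are hypothetical stand-ins for whatever engine is eventually chosen.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def build_index(street_names: Iterable[str]) -> Dict[str, Set[str]]:
    """Map every token to the set of street names that contain it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for name in street_names:
        for token in tokenize(name):
            index[token].add(name)
    return index


def candidates(index: Dict[str, Set[str]], page_text: str) -> List[str]:
    """Return the known street names whose tokens all occur in the page text."""
    tokens = set(tokenize(page_text))
    seen: Set[str] = set()
    for token in tokens:
        seen |= index.get(token, set())
    # Keep only names that are fully covered by the page tokens.
    return sorted(name for name in seen if set(tokenize(name)) <= tokens)
```

A lookup such as `candidates(index, page_text)` would then hand only the likely street names to the geo-coding step, instead of every string on the page.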

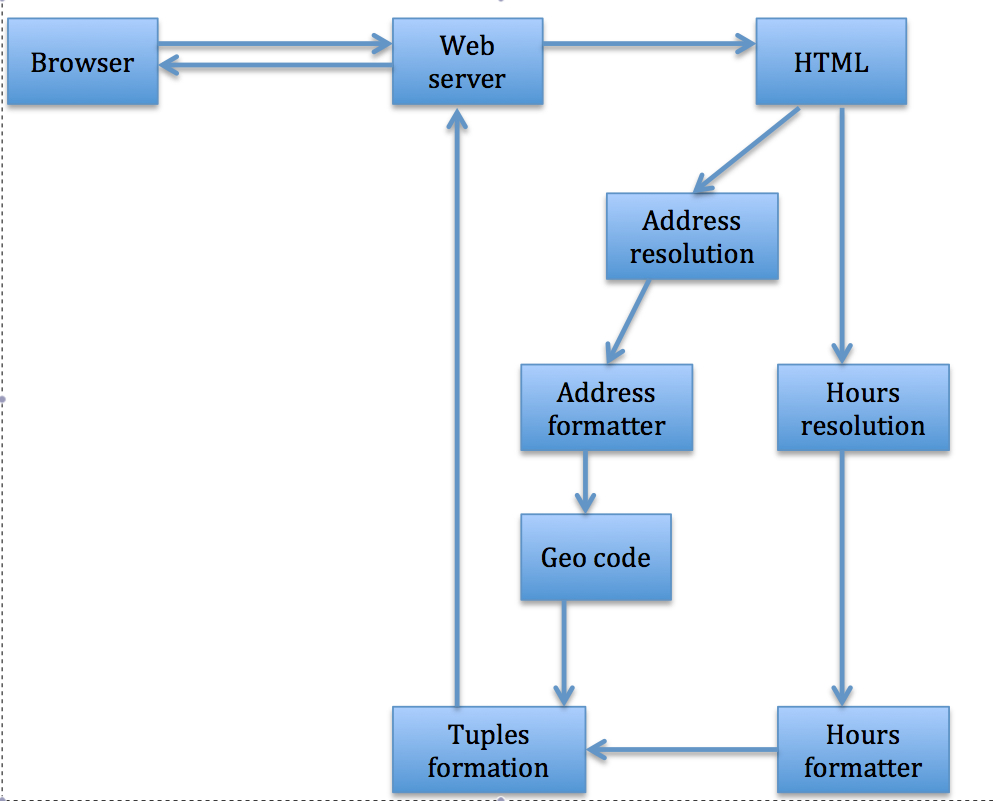

High-Level Block Diagram

The figure above shows a high-level block diagram of the project. The user of the application requests some information (for example, Alice looking for an open ice cream place), which results in a URL being sent to the web server. The web server fetches the HTML source of the URL, and the address resolution and hours resolution blocks look for the address and the opening and closing times of the place. The detected address and times are put into a standard structure by the address and hours formatters. The address is then sent to the geo-code block, which returns the geographical co-ordinates corresponding to the formatted address. The resulting tuples of geographical co-ordinates and opening hours are sent back to the web server and shown to the user on a map in the GUI, which lets her visualize the result of her query. The details of each of these blocks are given in the following discussion.

Data

We will download the OSM data from its download page: http://planet.openstreetmap.org/. The data is open and available free of cost. It amounts to 4 GB for Switzerland and 330 GB for the entire world. Currently, we plan to build the app for Switzerland only. However, we will build our infrastructure in a scalable fashion so that it can easily be extended to other countries. As mentioned above, we will use the imposm.geocoder library to handle the data.

Computational Resources

We will need two machines for our project. Here are our preferences:

1) Operating System - Linux

2) HDD - At least 5 GB of hard disk space

3) Root permissions or a virtual environment

Our databases and indexing engines will run in a distributed fashion using both machines to ensure faster performance. However, our code will be designed in such a way that more machines can be easily added whenever required.

FUNCTIONS OF EACH MODULE

Web Server

- Receive URL from browser

- Fetch html of URL

- Send html to address and hours resolution APIs

- Return response back to browser containing structured information tuples (geo-coordinates, opening hours)
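The four steps above can be sketched as a single request handler. This is a framework-agnostic illustration, not the project's actual server code: in the Flask application it would sit behind a route, and the names `fetch_html`, `handle_request`, `resolve_addresses` and `resolve_hours` are hypothetical. Geo-coding and tuple formation are left out to keep the sketch short.

```python
import json
from typing import Callable, List
from urllib.request import urlopen


def fetch_html(url: str) -> str:
    """Download the page at `url` and return its HTML as text."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def handle_request(
    url: str,
    resolve_addresses: Callable[[str], List[dict]],
    resolve_hours: Callable[[str], List[dict]],
    fetch: Callable[[str], str] = fetch_html,
) -> str:
    """Fetch the page, run both resolution steps, and return a JSON body."""
    html = fetch(url)  # step 2: fetch the HTML of the received URL
    payload = {
        "url": url,
        "addresses": resolve_addresses(html),  # step 3: address resolution
        "hours": resolve_hours(html),          # step 3: hours resolution
    }
    return json.dumps(payload)  # step 4: structured response for the browser
```

Passing the resolution functions and the fetcher as parameters keeps the handler easy to test without network access.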

Address Resolution

The address resolution API is one of the most important components of this project. Its main purpose is to detect address information in the HTML source of the URL. The API will use natural language processing and machine learning techniques. Developing it will require manual effort to collect the variety of address formats used on different web pages, and then to write regular expressions and simple heuristics that detect them. The API will be designed so that places are detected quickly for a given query. It should also attain a certain level of accuracy: at a minimum, it should detect entities that closely resemble the format of an address.
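As an illustration of the regular-expression approach, the sketch below detects addresses of the form "street number, ZIP city" in a page. It is a minimal example under stated assumptions: the keyword list `STREET_KEYWORDS`, the pattern `ADDRESS_RE` and the function `find_addresses` are hypothetical, the keywords cover only a few French street types, and real pages will need many more patterns.

```python
import re
from typing import Dict, List

# Hypothetical keyword list; the real one would come from the manual
# collection of address formats described above.
STREET_KEYWORDS = r"(?:Rue|Avenue|Av\.|Chemin|Ch\.|Route|Place|Boulevard)"

ADDRESS_RE = re.compile(
    r"\b(?P<street>" + STREET_KEYWORDS + r"\s+[^\d,]+?)\s+"  # e.g. "Rue de Bourg"
    r"(?P<number>\d+[A-Za-z]?)\s*,\s*"                       # e.g. "12" or "12b"
    r"(?P<zip>\d{4})\s+"                                     # four-digit Swiss ZIP
    r"(?P<city>[^\W\d_][\w-]*)"                              # single-token city
)


def find_addresses(html: str) -> List[Dict[str, str]]:
    """Strip tags, normalize whitespace, and return all address matches."""
    text = re.sub(r"<[^>]+>", " ", html)
    text = " ".join(text.split())
    return [match.groupdict() for match in ADDRESS_RE.finditer(text)]
```

Note that the city group matches a single token only, so multi-word city names would be truncated by this simple pattern.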

Address Formatter

Before extracting the geographical co-ordinates from the detected addresses, it is important to format the addresses properly. A typical address format could be: street name, street number, ZIP code, city. The address formatter is responsible for performing this task.
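A minimal sketch of such a formatter is shown below. It only normalizes whitespace and stray punctuation and joins the parts in the order given above; the function name `format_address` and its cleaning rules are assumptions made for illustration.

```python
def _clean(value: str) -> str:
    """Collapse runs of whitespace and drop surrounding punctuation."""
    return " ".join(value.split()).strip(" .,;")


def format_address(street: str, number: str, zip_code: str, city: str) -> str:
    """Return 'street name, street number, ZIP code, city'."""
    parts = [_clean(street), _clean(number), _clean(zip_code), _clean(city)]
    if not all(parts):
        raise ValueError("incomplete address")
    return ", ".join(parts)
```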

Hours Resolution

This API is similar in function to the address resolution API, except that it detects a list of opening and closing times of a place from a given URL. The API will use natural language processing techniques and simple heuristics to detect the times on the queried web page. A challenge in this part of the project is to distinguish opening and closing hours that depend on the time of year. For example, many places have different opening and closing times during Christmas and public holidays than during the rest of the year.

Hours Detection

The hours detection phase receives the list of hours from the hours resolution API and structures them using simple heuristics. The goal is to produce structured opening-hours information from the raw strings received.
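One possible structured form is a mapping from weekday to a list of opening intervals. The sketch below parses strings such as "Mon-Fri 09:00-18:30" into that form. The pattern `HOURS_RE`, the function `structure_hours` and the chosen output shape are assumptions for illustration; only English day names are handled, and seasonal or holiday hours are not.

```python
import re
from datetime import time
from typing import Dict, List, Tuple

DAYS = ["mon", "tue", "wed", "thu", "fri", "sat", "sun"]  # index = weekday()
_DAY = r"(?:mon|tue|wed|thu|fri|sat|sun)"

HOURS_RE = re.compile(
    r"\b(?P<d1>" + _DAY + r")[a-z]*"                   # first day, e.g. "Mon"
    r"(?:\s*-\s*(?P<d2>" + _DAY + r")[a-z]*)?"         # optional last day of a range
    r"\s*:?\s*(?P<h1>\d{1,2})[:.h](?P<m1>\d{2})"       # opening time
    r"\s*-\s*(?P<h2>\d{1,2})[:.h](?P<m2>\d{2})",       # closing time
    re.IGNORECASE,
)

Schedule = Dict[int, List[Tuple[time, time]]]


def structure_hours(raw_entries: List[str]) -> Schedule:
    """Turn raw opening-hours strings into {weekday: [(open, close), ...]}."""
    schedule: Schedule = {}
    for entry in raw_entries:
        for m in HOURS_RE.finditer(entry):
            try:
                interval = (
                    time(int(m.group("h1")), int(m.group("m1"))),
                    time(int(m.group("h2")), int(m.group("m2"))),
                )
            except ValueError:
                continue  # skip impossible times such as 25:00
            start = DAYS.index(m.group("d1").lower())
            end = DAYS.index(m.group("d2").lower()) if m.group("d2") else start
            # Walk the day range, wrapping around the week if needed.
            for offset in range((end - start) % 7 + 1):
                schedule.setdefault((start + offset) % 7, []).append(interval)
    return schedule
```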

Geographical Co-ordinates extraction

The ultimate goal of this project is to show, on the map in the GUI, the places relevant to a given query together with their opening and closing hours. The addresses extracted from the URLs must therefore be converted to geographical co-ordinates. For this component, we will use the existing library imposm.geocoder.

Tuples formation

The task of this phase is to connect the addresses and geo-coordinates to their corresponding opening and closing hours.
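The proposal does not specify how addresses and hours are matched. One simple heuristic, shown here purely as an assumption, is to attach each opening-hours string to the closest address that precedes it in the page text. The names `Place` and `form_tuples`, and the use of character offsets, are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, List, NamedTuple, Optional, Tuple


class Place(NamedTuple):
    lat: float
    lon: float
    hours: Optional[str]  # None when no hours were found for this address


def form_tuples(
    addresses: List[Tuple[int, float, float]],  # (offset in page, lat, lon)
    hours: List[Tuple[int, str]],               # (offset in page, raw hours)
) -> List[Place]:
    """Pair each hours entry with the nearest preceding address."""
    addresses = sorted(addresses)
    offsets = [offset for offset, _, _ in addresses]
    assigned: Dict[int, str] = {}
    for hours_offset, text in sorted(hours):
        i = bisect_right(offsets, hours_offset) - 1  # last address before the hours
        if i >= 0 and i not in assigned:
            assigned[i] = text
    return [
        Place(lat, lon, assigned.get(i))
        for i, (_, lat, lon) in enumerate(addresses)
    ]
```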

GUI

The GUI has three main functions:

- Show the original webpage

- Use geo-coordinates and the Google Maps API to show locations on the map

- Provide location-based and time-based filters using AJAX. These filters will let users narrow down their query to a specified region close to their original location, and will offer several other simple functions.
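The filtering logic behind the location-based and time-based filters could look like the sketch below, which an AJAX request from the GUI might call on the server. It assumes places are dictionaries with `lat`, `lon` and an `hours` schedule of the form `{weekday: [(open, close), ...]}`; these field names and the functions `haversine_km`, `is_open` and `filter_places` are hypothetical.

```python
from datetime import datetime, time
from math import asin, cos, radians, sin, sqrt
from typing import Dict, List, Tuple

EARTH_RADIUS_KM = 6371.0
Schedule = Dict[int, List[Tuple[time, time]]]


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def is_open(schedule: Schedule, when: datetime) -> bool:
    """True if `when` falls inside one of that weekday's opening intervals."""
    intervals = schedule.get(when.weekday(), [])
    return any(start <= when.time() < end for start, end in intervals)


def filter_places(
    places: List[dict], user_lat: float, user_lon: float,
    radius_km: float, when: datetime,
) -> List[dict]:
    """Keep places within `radius_km` of the user that are open at `when`."""
    return [
        p for p in places
        if haversine_km(user_lat, user_lon, p["lat"], p["lon"]) <= radius_km
        and is_open(p["hours"], when)
    ]
```

For Alice's query, `when` would be a Sunday at 19:00 and the user position her current location in Lausanne.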

Technical Challenges

Our project aims to gather information that exists on a multitude of web sites in different formats, so an important risk is that we may not be able to extract all the necessary information. For example, opening hours may be published as a JPG image, or a restaurant may have different opening hours depending on the time of year. To keep users interested in our application even in the cases we cannot fully cover, we will still have to find a way of representing this information so that users get relevant results for their searches.

The project has the following main technical challenges:

- Large scale indexing of map data for efficient and robust retrieval

- Machine learning techniques for address and entity detection

- Design and implementation of beautiful web interface with appropriate search tools

Team

Team members:

- Amit Gupta

- Anca-Elena Alexandrescu

- Renata Khasanova

- Nauman Shahid

- Marc Bourqui

- Mahsa Taziki


Timeline

We divide our timeline into 3 phases:

1) Infrastructure Phase (up to 2 April) - In this phase, we will set up all the individual components such as the database, web server and index engines, and start the manual work of collecting keywords and regular expressions.

2) Analytics Phase (up to 30 April) - In this phase, we combine the various components and build analysis tools on top.

3) Testing Phase (up to 13 May) - In this phase, we combine the various components and perform extensive testing.

| Team Members | 2nd of April 2014 | 30th of April 2014 | 13th of May 2014 |
| --- | --- | --- | --- |
| Anca | Geo-coding, database backend | Tuples Formation | Testing |
| Amit | Geo-coding, database backend | Address resolution | Testing |
| Renata | Index Engine Setup | Address formatting | Testing |
| Marc | Webserver configuration | Hours Detection | Testing |
| Mahsa | HTML, JavaScript, CSS | Front-end design + Integration | Documentation and Testing |
| Nauman | Regular expressions, hours resolution | Hours resolution and Formatting | Documentation and Testing |